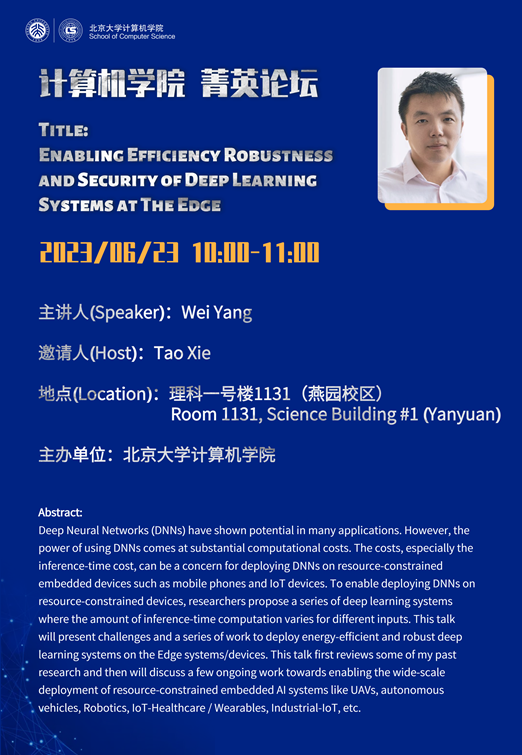

Speaker: Wei Yang

Time: 10:00-11:00 a.m., June 23, 2023, GMT+8

Venue: Room 1131, Science Building #1 (Yanyuan)

Abstract:

Deep Neural Networks (DNNs) have shown promise in many applications. However, this power comes at a substantial computational cost. These costs, especially at inference time, can be a concern when deploying DNNs on resource-constrained embedded devices such as mobile phones and IoT devices. To make such deployment feasible, researchers have proposed a series of deep learning systems in which the amount of inference-time computation varies from input to input. This talk will present the challenges of, and a line of work on, deploying energy-efficient and robust deep learning systems on edge systems and devices. The talk will first review some of my past research and then discuss several ongoing projects aimed at enabling the wide-scale deployment of resource-constrained embedded AI systems such as UAVs, autonomous vehicles, robotics, IoT healthcare and wearables, and industrial IoT.

Biography:

Wei Yang is an assistant professor in the Department of Computer Science at the University of Texas at Dallas, where he teaches and conducts research on software engineering and security. He received his Ph.D. in Computer Science from the University of Illinois at Urbana-Champaign, an M.S. in Computer Science from North Carolina State University, and a B.E. in Software Engineering from Shanghai Jiao Tong University. He was a visiting researcher at the University of California, Berkeley. He has received numerous awards, including the NSF CAREER Award and the ACM SIGSOFT Distinguished Paper Award.

Source: School of Computer Sciences