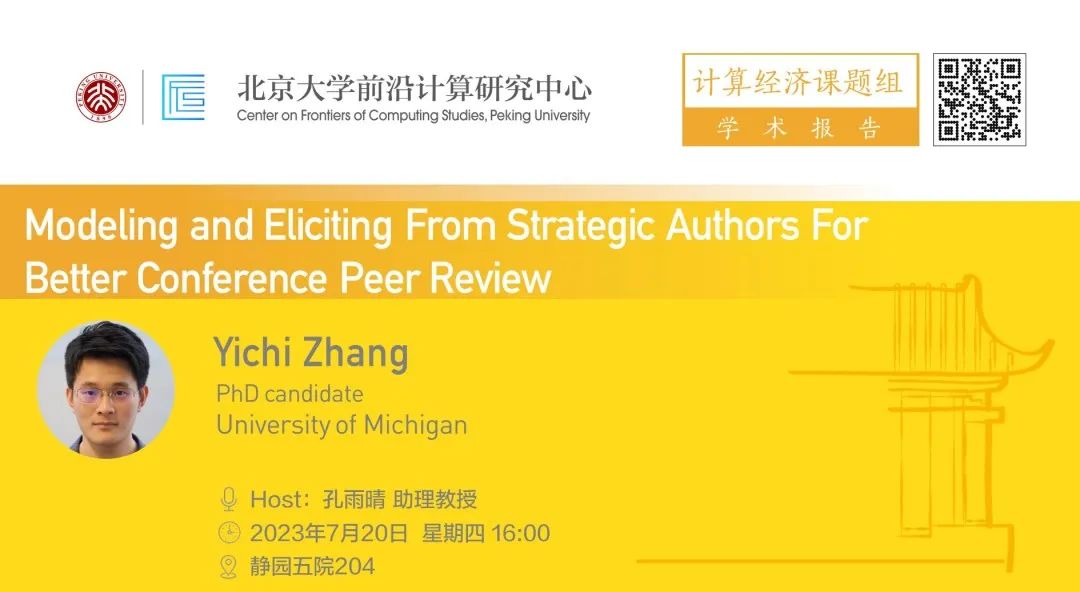

Speaker: Yichi Zhang, University of Michigan

Time: 16:00, July 20, 2023 (GMT+8)

Venue: Scan the QR code to watch online

Abstract:

The process of conference peer review involves three constituencies with different objectives: authors want their papers accepted at prestigious venues (and quickly), conferences want to present a program with many high-quality and few low-quality papers, and reviewers want to avoid being overburdened by reviews. These objectives are far from aligned; the key obstacle is that the evaluation of the merits of a submission is inherently noisy. Over the years, conferences have experimented with numerous policies and innovations to navigate the tradeoffs, including varying the number of reviews per submission, requiring prior reviews to be included with resubmissions, and many others. However, the role of authors in these experiments is usually underestimated.

This talk presents two recent studies on how conference review policies can take advantage of authors' strategic behavior. In particular, from a mechanism design point of view, we show that with a carefully designed review mechanism, the conference can benefit from authors' private information about the true quality of their own papers. The first part of the talk models the interaction between a conference and authors who decide whether to (re)submit their papers to the prestigious conference or to a sure bet (e.g., arXiv). We show how the conference should set its acceptance threshold to trade off conference quality against review burden while accounting for authors' self-selection. Next, in a setting where one author has multiple submissions, we propose a novel sequential review mechanism. We show that the proposed mechanism can 1) truthfully elicit the ranking of the papers by quality; 2) reduce the review burden and increase the average quality of the papers being reviewed; 3) incentivize authors to write fewer papers of higher quality.

Biography:

Yichi Zhang is a PhD candidate at the School of Information, University of Michigan, where he is advised by Grant Schoenebeck. His research interests lie at the intersection of computer science and economics, where he uses both theoretical and empirical approaches to model and understand incentive problems in multi-agent systems. He aims to design reward mechanisms that are both theoretically sound and practical, in order to motivate effort and honest behavior on data annotation platforms and in peer grading and peer review. His work has been published at top-tier CS conferences such as EC and TheWebConf.

Source: Center on Frontiers of Computing Studies